This one is going to be a little long, guys; I’ve been sick and home alone for the past two days. I spent most of that time asleep, but this blog post is my feeble attempt to feel like those two days weren’t totally wasted.

Once again, most of my favorite science news stories from this past month have been about small children and/or the brain. (I’ve had to cut the list down to just a few things because I couldn’t help rambling about some of them.) So I’m going to start with the couple of stories that don’t fit those themes. These first ones are both about physics. First, here’s some information about the enigma that is dark matter. Nope, physicists haven’t figured it out yet. We still don’t know what exactly dark matter is, except that it has gravitational effects on regular matter. But these scientists say that, based on the most plausible explanations of dark matter we have to go on, it’s not something that’s going to kill people. Click here to read their paper, which is much more technical than the article linked above.

IBM quantum computer

Meanwhile, here’s the latest news in quantum mechanics and its application in computer science. Also, you might be interested in looking at this other article from a few weeks earlier, which gives more information about what a “quantum computer” is. I honestly find it pretty confusing. Quantum mechanics seems like a very abstract, theoretical thing to me, so I can’t quite wrap my mind around the fact that it has applications in something as real and concrete as computer hardware.

Moving on to neuroscience, let’s talk about sensory processing, specifically our sense of hearing. This study indicates that humans respond differently to music than monkeys do. Essentially, human brains are more inclined to pick out musical tone, while monkeys perceive music as just another noise. Interestingly enough, this research project was inspired by similar research on visual perception that produced very different results. Monkeys evidently interpret visual input the same way humans do, and in fact, their reaction to non-musical sounds is also equivalent to that of a human. So, to put it simply, music appreciation may be a specifically human trait. If this experiment is replicated with other types of animals, I’d be interested to see the results.

Meanwhile, a completely unrelated study has demonstrated that there’s a difference between listening to someone’s voice and listening to the words they’re saying. And no, the difference isn’t a matter of paying attention. In this experiment, participants’ brains literally processed vocal sounds differently depending on whether they had been asked to distinguish different voices or to identify the phonemes. (A phoneme is an individual speech sound, such as /p/ or /t/.) Rather than using actual words, researchers used meaningless combinations of phonemes, like “preperibion” and “gabratrade”, presumably to isolate the auditory neurological processes from the language comprehension neurological processes. This experiment was all about the sounds, not semantics. Still, I would imagine that the most relevant takeaway from this study is related to language. In order to function in society, we need to instinctively recognize a familiar word or phrase by its phonemes regardless of who is saying it. Otherwise, we would have to relearn vocabulary every time we met a new person.

This leads me to some other interesting information about language and linguistics. The Max Planck Institute for Psycholinguistics suggests that the complexity of the grammar in any particular language is determined by the population size of the group in which the language developed. (That is, languages with more speakers have simpler grammatical rules.) This isn’t a new theory; in fact, it’s fairly well established that there’s a correlation between a language’s simplicity and its current population of speakers. But for the social experiment described in this article, the question is how and why this is the case.

Participants in this study had to make up their own languages over the course of several hours. They played a guessing game in which people paired up and took turns: one person used made-up nonsense words to describe a moving shape on a computer screen, while the partner tried to figure out what the shape was and which direction it was moving. Participants would switch partners occasionally, so each group could have a different number of members even though only two people were communicating at a time. Initially, both the descriptions and the guesses were completely random, but eventually, the participants developed some consistency of vocabulary and grammar within their group.

The results were as expected. Larger groups developed consistent, simple, and systematic grammatical rules because communication was impossible otherwise. Some of the smaller groups never developed grammatical rules at all, just some vocabulary, and those that did use some form of grammar didn’t necessarily have very logical or clearly defined rules. You can make do without consistent grammatical rules when you’re only trying to coordinate communication between a few people. Additionally, the smaller groups produced languages that were entirely unique, while the larger groups tended to develop languages that resembled those of other groups.

Of course, it’s questionable whether an experiment like this is an accurate representation of real-world languages. Languages develop over many generations, not just a few hours, and even uncommon languages have thousands of speakers. (Yes, there are some languages that only have a few living native speakers left, but in those cases, the language used to have many more speakers and has been gradually dying out. The number relevant to this research isn’t the current number of native speakers; it’s the population size in which the language originated.) It’s an interesting experiment, though, and it’s at least plausible that it accurately replicated the social processes by which a language’s grammar is formed.

This research from Princeton University looks at a different subfield of psycholinguistics: language acquisition in early childhood. Researchers were curious about how a toddler figures out whether a new vocabulary word has a broad or specific meaning. For example, if you show a child a picture of a blowfish and teach him or her the word “blowfish,” will the child mistakenly apply that word to other types of fish, or will the child understand that the word “blowfish” only refers to the very specific kind of fish in the picture? (The article uses this example to coin the term “blowfish effect.”) Toddlers are surprisingly good at this. Sure, they get it wrong every now and then, but think about it. How often have you heard a child say “bread” when they’re talking about some other type of food, or “ball” when they’re talking about some other type of toy? Those kinds of mistakes don’t happen nearly as often as they would if this type of learning were entirely trial-and-error.

Apparently, the prevalent theory has previously been that children always learn general words before learning specific words, presumably because the general words tend to be more commonly spoken and shorter. (I deliberately used only one-syllable examples at the end of the previous paragraph, but it is true that one-syllable nouns tend to have more general meanings than multi-syllable nouns.) However, as anyone who spends much time around children can tell you, some children do learn some very specific words, most often nouns, at a very young age. I’ve seen children as young as three accurately identify specific objects, as represented by toys or pictures, such as different types of construction vehicles or different kinds of dinosaurs. In fact, in my experience, a small child is more likely to confuse two words with specific meanings (such as “tiger” and “lion”, or “bulldozer” and “dump truck”) than to confuse a general word with a more specific one (such as “tiger” and “cat”, or “bulldozer” and “truck”). So there’s clearly some method for distinguishing general terms from specific terms, and it makes sense to study how kids learn these things.

Researchers evaluated this by using pictures to teach made-up one-syllable words to children and then asking the children to identify more pictures that correspond to that word. They did the same thing with young adults and found that the toddlers and the adults performed similarly. There seemed to be two rules that determined whether the subject interpreted the word as a specific term or a general term. First, the person uses what knowledge they have of the outside world to determine whether the thing in question looks normal or unusual. A blowfish is a distinctive-looking creature, so a child is more likely to associate its name with the specific species than to extrapolate the meaning and call other types of fish a “blowfish,” but that same child might perceive a goldfish to be a very normal fish and might mistakenly use the word “goldfish” for other fish.

The other rule has to do with variety. If you show a child a picture of three different kinds of dogs and teach the child that those are called dogs, the child will easily understand that dogs come in a variety of sizes and appearances. But if you show the same child a picture of three dalmatians and teach them the word “dog”, that child might think that only a dalmatian is a “dog”. In real life, I would imagine that this applies across a period of time. If the child learns that a certain dalmatian is a “dog” one day and then learns that a certain dachshund is a “dog” a few days later, and then later hears Grandma call her golden retriever a “dog”, that child will understand that “dog” is a general word that encompasses many animals. But if the child doesn’t know any dogs other than Grandma’s golden retriever, he or she may not recognize a dalmatian or a dachshund as a dog for months or even years after learning the word “dog”.

I’ve veered away from the article a little and am making up my own examples now, but the point is the same. Young children use context cues to apply new vocabulary, and they do so using the same cognitive skills that an adult would. Because this happens at a subconscious level, we don’t recognize just how intelligent toddlers are. We’re more likely to be amused at their linguistic errors than impressed at their language acquisition, but it’s pretty incredible just how rapidly children develop vocabulary between the ages of two and about five.

In further baby-related news, researchers have demonstrated that children as young as six months old can display empathy when presented with video clips of anthropomorphic shapes. One such clip shows a circle character and a rectangle character walking together without any bullying behavior, and another clip shows the circle character pushing the rectangle character down a hill, at which point the rectangle cries. (Ya gotta watch out for those circles, buddy!) When the babies were later given a tray containing these anthropomorphic shapes, they displayed a preference for the poor rectangle who had been so cruelly bullied.

The University of Illinois at Urbana-Champaign has conducted another recent study that also involved making babies watch characters bullying each other. These child researchers are a positive bunch. This study involved older children (the summary says “infants 17 months of age”, which sounds like a contradiction in terms to me) and evaluated whether children this young perceive social hierarchies and power dynamics. The answer is evidently yes. The researchers measured the babies’ degree of surprise or confusion by how long they stared at scenarios acted out in front of them with bear puppets. If this staring is in fact an accurate way to determine what the baby does or doesn’t expect, then the subjects displayed an expectation that a puppet who acts as a leader will step in to prevent wrongdoing. There were several variations on the skit, and researchers noted that the babies didn’t seem to expect this same corrective reaction from a character who was not presented as a leader.

That just about covers it for the science news about babies’ minds, but I’ve got plenty more stuff about minds in general. For example, we’re a step closer to telepathic multiplayer video games. Also, want to see the most detailed images of a human brain that scientists have ever gotten? Then watch this YouTube video. But if you’re having an MRI done anytime soon, don’t expect this kind of image clarity. This scan was done on a post-mortem brain, and it took 100 hours.

The research described in this next article has identified the brain area responsible for the uncanny valley. I’ve mentioned the so-called “uncanny valley” before; it’s an interesting phenomenon that has relevance in discussions of technology (specifically AI) or psychology, and I would argue that it has significant applications in literature and the arts. In case you aren’t inclined to look it up or click the hyperlink to my previous blog post, I’ll clarify that the “uncanny valley” refers to the fact that people tend to find non-human things creepy if they look too much like humans.

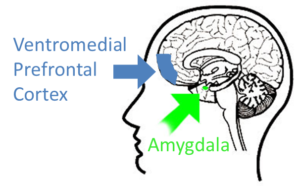

This recent research demonstrates that this creepy feeling comes from the ventromedial prefrontal cortex (VMPFC), the brain region that is essentially right behind your eyes. This is not surprising because the roles of the VMPFC have to do with processing fear, evaluating risks, and making decisions. The VMPFC regulates the fight-or-flight reaction generated in another brain region called the amygdala. If you walk into a dimly lit room and see a spooky face staring at you, it’s your amygdala that’s responsible for that immediate, instinctive moment of panic. You feel a jolt of fear, your heart rate suddenly goes up, you probably physically draw back… and then you realize that there’s a mirror on the wall. The spooky face is your own reflection, and it only looks spooky because of the dim lighting. You can thank your VMPFC for assessing the situation and figuring out that there’s no legitimate danger before you have a chance to act on that fight-or-flight response and run away from your own reflection like an idiot.

But the VMPFC also plays the reverse role. Even when your amygdala isn’t reacting to a perceived danger, your VMPFC is analyzing potential risks for the purpose of making decisions. It considers risks other than immediate danger, which include the long-term effects of a decision or the impact that your actions might have on another person. You may have heard of Phineas Gage, a railroad worker who sustained severe brain damage in 1848 and came out of it alive, but with a completely changed personality. It was his VMPFC that was impaired. As a result, his behavior became volatile and he displayed a loss of conscience. While it would be overly simplistic to say that your ventromedial prefrontal cortex is your conscience, it is the region of your brain that you use when you’re making those types of decisions. It’s also responsible for considering risks when you’re making decisions that could impact intangible and non-immediate things like your relationships, social standing, or financial situation. These are all things that are probably very important to you, but because they’re conceptual and don’t involve immediate physical danger, situations that pose a threat to them usually don’t generate that fight-or-flight response from your amygdala.

All of this is to say that it stands to reason that the VMPFC is the brain region responsible for generating the general feeling of unease described by the uncanny valley. If you’re having a conversation with a machine that has artificial intelligence and a human-like appearance, you’re not in immediate, obvious, physical danger. Unless you’ve previously had some kind of traumatic experience with AI-endued robots, your amygdala will probably not be generating the kind of fear that makes you want to run away screaming. But the situation is bizarre enough that your VMPFC remains wary. It’s anticipating the possibility of risks and decisions that require conscious thought rather than instinctive action. And that anticipation translates to what is best described as a creepy feeling.

Finally, as we all know, you can’t tell how smart a person is by what their brain looks like… right? Well, if you use a special type of MRI technique in combination with mathematical algorithms, you can evidently figure out what the network of neural pathways looks like in a particular brain. As is probably obvious, intelligent thought depends upon the efficiency of these neural pathways. Thoughts and ideas literally move through the brain via electrical and chemical signals, and in order to comprehend a new concept or come up with a bright and original idea, you need various parts of your brain to work together. Sure enough, these researchers determined that people with greater general knowledge had more efficient neural pathways. You still can’t tell how smart a person is just by looking at them, but technically, there is a potentially discernible difference between a brain that is smart and one that isn’t.